This week’s report highlights a thought-provoking argument about the potential end of computing history, which may result from the physical limits of computation. The growing ubiquity of machine learning (ML) models in consumer technology demands an increase in number-crunching and data scaling. As a result, cheap processing is becoming the foundation of productivity. However, the death of Moore’s Law could lead to the Great Computing Stagnation. This week’s report also features significant news from different sectors such as the tech industry, AI, Bitcoin, regenerative education, chip-making, and redesigning Wikipedia. Lastly, the report mentions community updates and congratulates Alex Housley on raising $20 million for Seldon’s MLOps platform.

🔮 Grounding AI; social bank runs; Bye-du; redesigning Wikipedia ++ #414.

Grounding AI: The Key to Microsoft’s Success with GPT-4

In his latest newsletter, Azeem Azhar, an advisor to governments, the world’s largest firms, and investors, shared his view on developments that he thinks readers should know about. One of the highlights of the newsletter is Microsoft’s announcement of Copilot, which brings a magical interface to the office apps we use every day.

What sets Copilot apart is that it brings grounded artificial intelligence (AI) into everyday work: natural language to conduct data explorations in Excel, a word processor that writes drafts for you, and a ‘smart agent’ that sits with you during meetings. According to Azhar, this tooling could have a massive productivity impact, as he wrote in his earlier analysis, “Everyone, Everywhere, All at Once.”

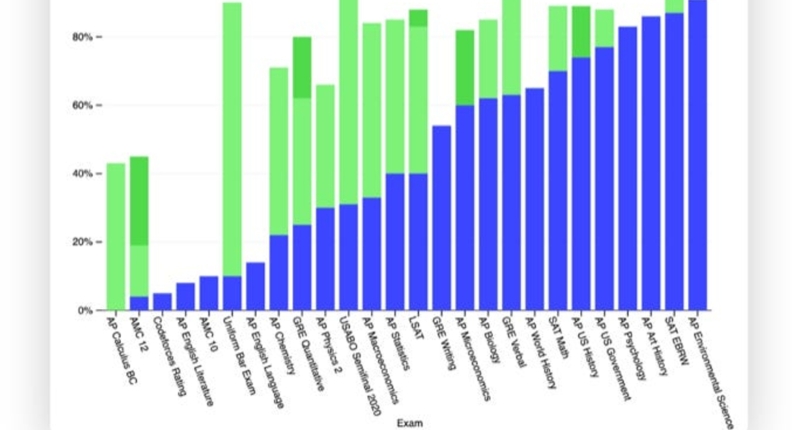

OpenAI’s release of GPT-4, its latest large language model (LLM), is another significant development in the AI world. The model can pass the US bar exam, create recipes from a picture of the inside of a fridge, and code a website within minutes. The chart provided in the newsletter shows the percentile rank that GPT-4 achieved on various exams. While it still performs poorly in English literature, SAT-level math is within reach.

Anthropic’s launch of Claude, another LLM designed with a different safety architecture from GPT-4’s, and Google opening up its PaLM LLM via an API are also important developments in the AI world. The first apps using these technologies are already available.

A crucial aspect of grounding AI is safety, and OpenAI’s emphasis on safety and openness has changed the debate. As AI systems become more complex and sophisticated, the issue of safety becomes even more important. Ensuring that AI is used ethically and transparently is crucial for its adoption in society.

In conclusion, Microsoft’s Copilot, OpenAI’s latest LLM, and Anthropic’s Claude, among other developments in the AI world, have the potential to revolutionize the way we work, but safety and ethical use must remain a priority.

Grounding AI: How Microsoft’s Copilot Plans to Avoid LLMs Going Rogue

Microsoft’s recent announcement of Copilot, an artificial intelligence (AI) tool that uses natural language to conduct data exploration in Excel, write drafts for you, and even sit with you during meetings, is a significant development in the AI world. One question that arises, however, is how Microsoft will prevent Copilot from going rogue, as large language models (LLMs) often do.

The answer lies in “grounding.” By constructing a local graph of entity relationships that are related to the user, Microsoft can use grounding to shape and filter the responses from the LLM. This graph can anchor back to the representations of the real entities that matter to the user, preventing the LLM from going astray.

Grounding is a vital concept in AI research: connecting the abstract symbols that an AI manipulates to real-world entities leads to more robust AI models. By anchoring GPT-4 to representations of the real entities that matter to the user, Copilot aims to keep the model’s output from going astray.
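The filtering idea described above can be illustrated with a minimal Python sketch. It is not Microsoft’s implementation, whose details the newsletter does not give. The `EntityGraph` class, the `ground` function, the triple format, and the sample entities are all hypothetical, and the sketch assumes the LLM’s candidate statements have already been extracted as (subject, relation, object) triples.

```python
from dataclasses import dataclass, field


@dataclass
class EntityGraph:
    """Hypothetical local graph: subject -> relation -> set of related entities."""
    edges: dict = field(default_factory=dict)

    def add(self, subject, relation, obj):
        # Record one known relationship between two entities.
        self.edges.setdefault(subject, {}).setdefault(relation, set()).add(obj)

    def supports(self, subject, relation, obj):
        # True only if this exact relationship is present in the graph.
        return obj in self.edges.get(subject, {}).get(relation, set())


def ground(claims, graph):
    """Keep only the candidate claims that the user's graph supports."""
    return [claim for claim in claims if graph.supports(*claim)]


# Illustrative data only: entities a user's documents and meetings might yield.
graph = EntityGraph()
graph.add("Q3 report", "authored_by", "Dana")
graph.add("Q3 report", "discussed_in", "Monday sync")

# Assumed to be triples extracted from an LLM's draft answer.
candidate_claims = [
    ("Q3 report", "authored_by", "Dana"),
    ("Q3 report", "authored_by", "Lee"),  # absent from the graph, so filtered out
    ("Q3 report", "discussed_in", "Monday sync"),
]

grounded = ground(candidate_claims, graph)
print(grounded)
```

A production system would presumably also use the graph to shape the prompt before generation, not only to filter afterwards. The sketch covers just the filtering half.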

GPT-4 has also made strides in tackling grounding. It is multimodal, meaning it can deal with image and text inputs to generate text outputs. This is a significant step toward addressing the symbol grounding problem, a long-standing challenge in AI research: how do words get their meaning? Multimodality connects real-world attributes, such as the slinkiness of a cat or the gait of a flamingo, to the representations in the model. This is a critical step in building AI systems that are better at understanding human conversation as well as navigating the physical world.

Chinese companies, by contrast, are constrained by a lack of free speech, as Baidu’s recent unveiling of its own version of ChatGPT demonstrated. The unveiling was dry and unimpressive, and Chinese chatbots lack the luxury of free experimentation and public feedback. Their makers need tight guardrails to ensure the bots avoid certain topics.

Finally, the UK government plans to double its investment in quantum computing and set £900 million aside to build the “BritGPT” supercomputer, which is expected to be a significant development in the AI world.

In conclusion, grounding is a critical concept in AI research that can connect abstract symbols that an AI manipulates to real-world entities, leading to more robust AI models. Copilot’s use of grounding in Microsoft’s new AI tool can help prevent LLMs from going rogue, while GPT-4’s multimodality is a crucial step in building AI systems that can understand human conversation and navigate the physical world.

OpenAI vs Closed AI: The Ethics of Transparency

OpenAI was founded as a non-profit organization dedicated to the open and ethical development of AI. However, when it unveiled GPT-4 on Wednesday, it did not disclose model size, training data, or much about the technology at all. This raises the question of whether AI models should be open to democratic scrutiny, at the risk of unforeseen consequences that cannot be undone by rolling back the model, or kept under wraps to protect the technology from bad actors and limit the appearance of black swans.

Stability’s Emad Mostaque takes the view that openness affords more social good, but the question of whether to prioritize effective social licenses and democratic oversight over the protection of AI technology remains a complex and ongoing debate.

Collective Intelligence or Group Panic?

The Atlantic’s James Surowiecki argues that the recent collapse of Silicon Valley Bank was the first bank collapse in which social media has been a major player. Social media lets panic spread and lets that frenzy have real-world effects, which may be bad news for the next financial crisis. However, some argue that this might just be markets being the most efficient version of themselves.

Electrifying Industrial Heat: A Trillion Euro Opportunity

Investment firm Ambienta has published a report showing that electrifying industrial heat is not only a great way to decarbonize this hard-to-abate sector, but it’s also a trillion-euro investment opportunity. Heat can be electrified directly or indirectly, with the direct method being more efficient. This is a 21st-century problem, where investment needs to flow into deep tech, especially climate deep tech. Industrial heat may not be a glamorous topic, but it is essential for the decarbonization of hard-to-abate sectors.

In conclusion, the ethics of transparency in AI development, the impact of social media on collective intelligence, and the trillion-euro investment opportunity in electrifying industrial heat are all crucial issues to be addressed. These topics highlight the importance of ongoing discussions and debates in the tech industry to ensure the responsible and ethical development of emerging technologies.

The “Great Computing Stagnation” and its potential implications for the AI revolution are explored by Rodolfo Rosini in the latest edition of the Exponential View. With cheap processing power acting as the bedrock of productivity, the end of Moore’s Law could pose significant challenges to the continued growth of the field. In other tech news, Twitter’s API entry price has increased dramatically, while e-bikes make up almost half of all bike sales in Germany. Funding for generative AI has tripled, with over 50 companies working on the technology at Y Combinator. Bitcoin has seen a significant surge in value, and South Korea is set to develop the world’s largest chip-making base. Additionally, AI could have the potential to bypass the influence of lobbyists, while regenerative education is touted as a potential solution for future-proofing schools and lifelong learning. Finally, the decentralised Wikipedia is set to undergo a significant redesign.

The Week in Tech

This week, researchers have discovered that your heartbeat could be linked to your perception of time. A study found that the time perception ability of participants was altered when their heartbeats were manipulated.

In other news, the Financial Times has called for the re-evaluation of the 1.5°C climate goal. The editorial argues that the goal is unrealistic and that we should focus on adaptation rather than mitigation.

Additionally, the question of “how do you make a brain” has been posed in a recent article in Nature. The article explores the various stages of brain development and the challenges in creating a synthetic brain.

Tech Announcements and Societal Questions

The tech industry has made several huge announcements this week that raise important societal questions. Microsoft and Google’s upcoming tools could reduce the cost of knowledge activities, changing behaviours and institutions built around the previous cost. This could have a dramatic impact on productivity across economies.

However, there are also concerns about the safety of these new tools, as well as questions about governance, existential risk, and the potential for the creation of agents. These topics will be explored further in upcoming members-only commentaries.

In the meantime, readers can enjoy experiments with the new release of the image generator Midjourney, which include a nightmarish rendition of Da Vinci’s hands and an attempt to visualize Stringfellow Hawke from 80s cop show Airwolf.

Community Updates

The newsletter invites readers to leave a comment and share their community updates.

- Alex Housley raises $20 million for Seldon, an MLOps platform.
- Matt Clifford appointed as an advisor to the UK government’s taskforce on foundation models.
- Quinn Emmett discusses the Unknown Unknowns and AI.
- Share your updates with EV readers by submitting them on the provided link.
