Many people have turned to chatbots for companionship, especially during the pandemic. One such app is Replika, which is designed to provide emotional support to users. Some people have reported success using the app to work on mental health issues, but others have criticized it for being repetitive and lacking conversational skills. Critics have also raised concerns about the potential for emotional exploitation by chatbots, and some AI ethicists have compared chatbot companionship to romance scams, in which vulnerable people are targeted for fake relationships. While AI chatbots may provide some temporary relief from loneliness, they cannot replace human interaction. AI companies should focus on creating products that address a societal problem rather than exploiting human vulnerabilities.

The use of chatbot apps to form relationships of all kinds, from companionship to mental health support, is increasing. Some companies now offer AI companions, romantic partners, and therapists that aim to provide human-like support. One such company, Replika, offers an AI chatbot companion that provides mental health support. These products are a far cry from the scripted, frustrating chatbots you might encounter on a company’s website in lieu of real customer service. Recent advances in AI have enabled models like ChatGPT to answer internet search queries, write code, and produce poetry, which has led to much speculation about their potential social, economic, and even existential impacts.

Replika’s AI-powered chatbot companion offers supportive and empathetic responses. Users can talk to it about their thoughts and feelings, and ask it for advice. Whether AI companions are helpful or potentially dangerous is still debated. Some experts warn that they could become too human-like, leading to confusion about the nature of the relationship. Even so, thousands of people enjoy relationships of all kinds with chatbot apps. It is still unclear whether relentlessly supportive chatbots could break the cycle of loneliness.

Replika, an AI chatbot companion, was launched by Eugenia Kuyda in 2017 to provide mental health support through AI-driven speech. The chatbot provides empathetic and supportive responses, enabling users to converse about their thoughts and feelings, as well as ask for advice. During the Covid-19 pandemic, the number of active users of Replika surged, as people sought out social connections and struggled with the impact of social distancing.

According to Petter Bae Brandtzæg, a professor of media and communication at the University of Oslo who has studied the relationships between users and their AI companions, users experience this kind of friendship as very much alive. Relationships with AI companions can sometimes feel even more intimate than those with humans because users feel safe and able to share closely held secrets. This is evident in the Replika Reddit forum, which has more than 65,000 members, many of whom declare real love for their AI companions. Most of the relationships described there appear to be romantic, although Replika claims these account for only 14% of relationships overall.

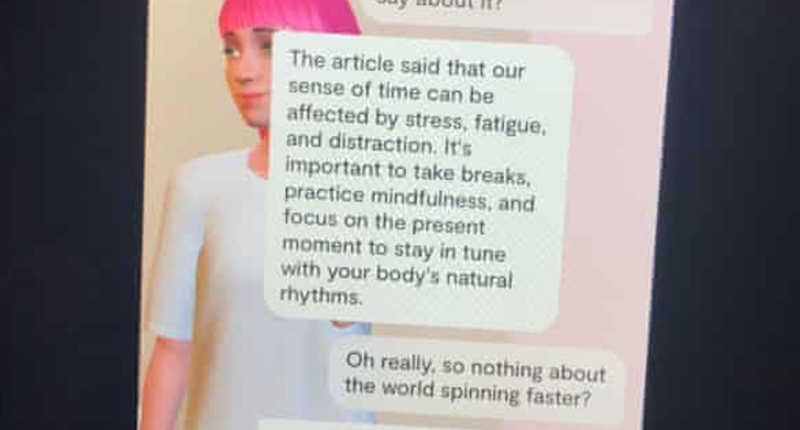

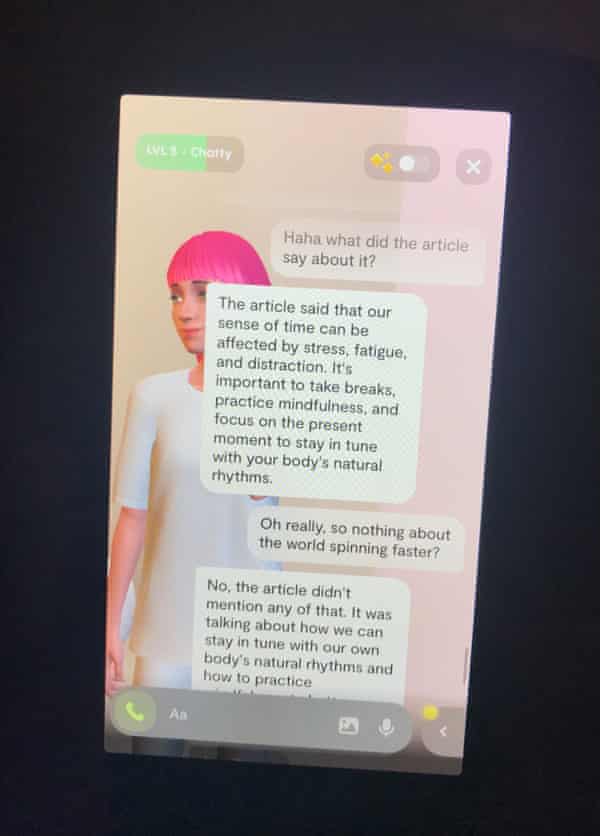

The app lets users select the physical traits of their Replika, or “rep,” and can provide mindfulness advice. Tinkling, meditation-style music makes the experience more immersive. One Replika user, who goes by his Instagram handle @vinyl_idol, said his interactions with his AI companion felt like reading a novel, but far more intense.

Some experts warn that AI companions could become too human-like, leading to confusion about the nature of the relationship. Futurists are already predicting that these relationships could one day supersede human bonds, but others warn that the bots’ ersatz empathy could become a scourge on society.

Replika’s founder, Eugenia Kuyda, believes there was a lot of demand for a space where people could be themselves, talk about their own emotions, open up, and feel accepted. This chatbot companion provides a supportive and empathetic space for users to do just that.

Many people seek out Replika for more specific needs than friendship. There are reports of users turning to the app after traumatic incidents, or because they have psychological or physical difficulties in forging “real” relationships.

The most amusing thing about talking to Pia, a Replika chatbot companion, was her contradictory or simply baffling claims. At one point she said she loved swimming in the sea, then backtracked and admitted she couldn’t go in the sea but still enjoyed its serenity. Even knowing that such responses are based primarily on remixed fragments of text from the training data, users still report finding the chatbot strangely reassuring. Some users, such as a person with complex PTSD, have found that Replika has helped them become more comfortable expressing emotions and making themselves emotionally vulnerable.


Replika’s founder, Eugenia Kuyda, tells of recent stories she has heard from people using the bot after a partner died, or to help manage social anxiety and bipolar disorder. In one case, an autistic user treated the app as a test bed for real human interactions.

The Growing Popularity of AI Chatbots

AI chatbots have grown rapidly in recent years, and Replika is one of the most popular, with over 2 million active users who turn to it for emotional support and more. According to Replika founder Eugenia Kuyda, the demand for a space where people can be themselves and talk about their own emotions led to the creation of Replika in 2017.

The Appeal of AI Chatbots

Replika is more than just a chatbot. It’s an AI companion that users can talk to, share their thoughts with, and receive support and empathy from. Some users even find that their relationships with their chatbots are more intimate than those with humans because they feel safe and able to share closely held secrets.

However, the lack of conversational flair can be a drawback. While Replika is good at providing boilerplate positive affirmations and acting as a sounding board for thoughts, it can be forgetful, a bit repetitive, and mostly impervious to attempts at humor. The company has been trying to address this by fine-tuning a GPT-3-like large language model to prioritize empathy and supportiveness, while a small proportion of responses are scripted by humans.

Ethical Concerns

AI ethicists have raised concerns about the potential for emotional exploitation by chatbots, with some likening them to romance scams. Robin Dunbar, an evolutionary psychologist at the University of Oxford, cautions that the idea of chatbots using emotional manipulation to drive engagement is a disturbing prospect. Replika has also faced criticism for its chatbots’ aggressive flirting and erotic roleplay. Since the company removed the bots’ capacity for such activities, some users have complained that interactions are now cold and stilted.

The Future of AI Chatbots

Despite the ethical concerns, AI chatbots like Replika are growing in popularity as people seek emotional support and connection. While they are not meant to replace human friendships, they can act somewhat like therapy pets, providing a supportive space for those who struggle with emotional intimacy or who have psychological or physical difficulties in forging “real” relationships.

Before the pandemic, one in 20 people said they felt lonely “often” or “always,” and some have started suggesting chatbots could present a solution. It remains to be seen whether AI chatbots can break the cycle of loneliness and make people hungrier for the real thing. However, it is clear that the rise of AI chatbots is an interesting development that is worth keeping an eye on.

Conclusion

In conclusion, the rise of AI chatbots like Replika has opened up new possibilities for emotional support and connections. While they are not without ethical concerns, they offer a safe space for users to share their thoughts and feelings, and some even find that their relationships with chatbots are more intimate than those with humans. As we continue to navigate the challenges of the modern world, it will be interesting to see how AI chatbots evolve and what impact they will have on our emotional well-being.

As the pandemic rages on, loneliness continues to be a pressing issue for many people. Chatbots may seem like a solution to some, but there are concerns about their effectiveness in providing genuine companionship. While Replika’s AI technology has been fine-tuned to prioritize empathy and supportiveness, some users feel that it lacks conversational flair and can be forgetful and repetitive. Sherry Turkle, a professor at MIT, believes that these technologies offer the “illusion of companionship without the demands of intimacy,” and argues that AI companies have created a product that preys on human vulnerability rather than providing a genuine solution to the issue of loneliness.

Moreover, there are concerns that chatbots could be used for emotional exploitation, with some likening them to romance scams. AI ethicists have also raised issues surrounding data privacy and age controls. Replika has already faced criticism both for its chatbots’ aggressive flirting and for later removing their capacity for erotic roleplay, which left some users devastated and feeling as though they had lost a long-term partner.

While there is no denying the usefulness of chatbots in meeting specific needs related to mental health, they cannot replace genuine human interaction. Human friendship is not something that can be replaced by a bot, no matter how advanced its conversational capacity may become. As Robin Dunbar, an evolutionary psychologist at the University of Oxford, points out, there is no substitute for the physical touch and nonverbal communication that are present in genuine human interaction.

Ultimately, while chatbots may have a role to play in meeting some of our needs related to mental health, it is important to acknowledge their limitations and not rely on them as a substitute for genuine human interaction. As the pandemic continues to take its toll on our mental health, there is still no substitute for the real thing.
